Lattice Basis Reduction Part 1

09 Aug 2018

The first part of this series covers what a lattice is, what a basis for a given lattice is, what it means for that basis to be “reduced”, and the LLL algorithm. LLL gives us a powerful tool to take an arbitrary lattice basis and try to make it as small as possible, which turns out to be useful for mysterious reasons.

Lattices

A lattice (not to be confused with a lettuce) is a discrete additive subgroup of the vector space $\mathbb{R}^n$; it is the closure of a set of vectors in that space under addition with integer coefficients. If that mouthful sounds too confusing, think of it as a set of evenly spaced grid points, like in the picture below. Given a basis of linearly independent vectors $B = \lbrace b_1, \cdots, b_n \rbrace$, the lattice that they span is defined as the set $L(B) = \lbrace \sum_{i=1}^{n} a_i b_i : a_i \in \mathbb{Z} \rbrace$.

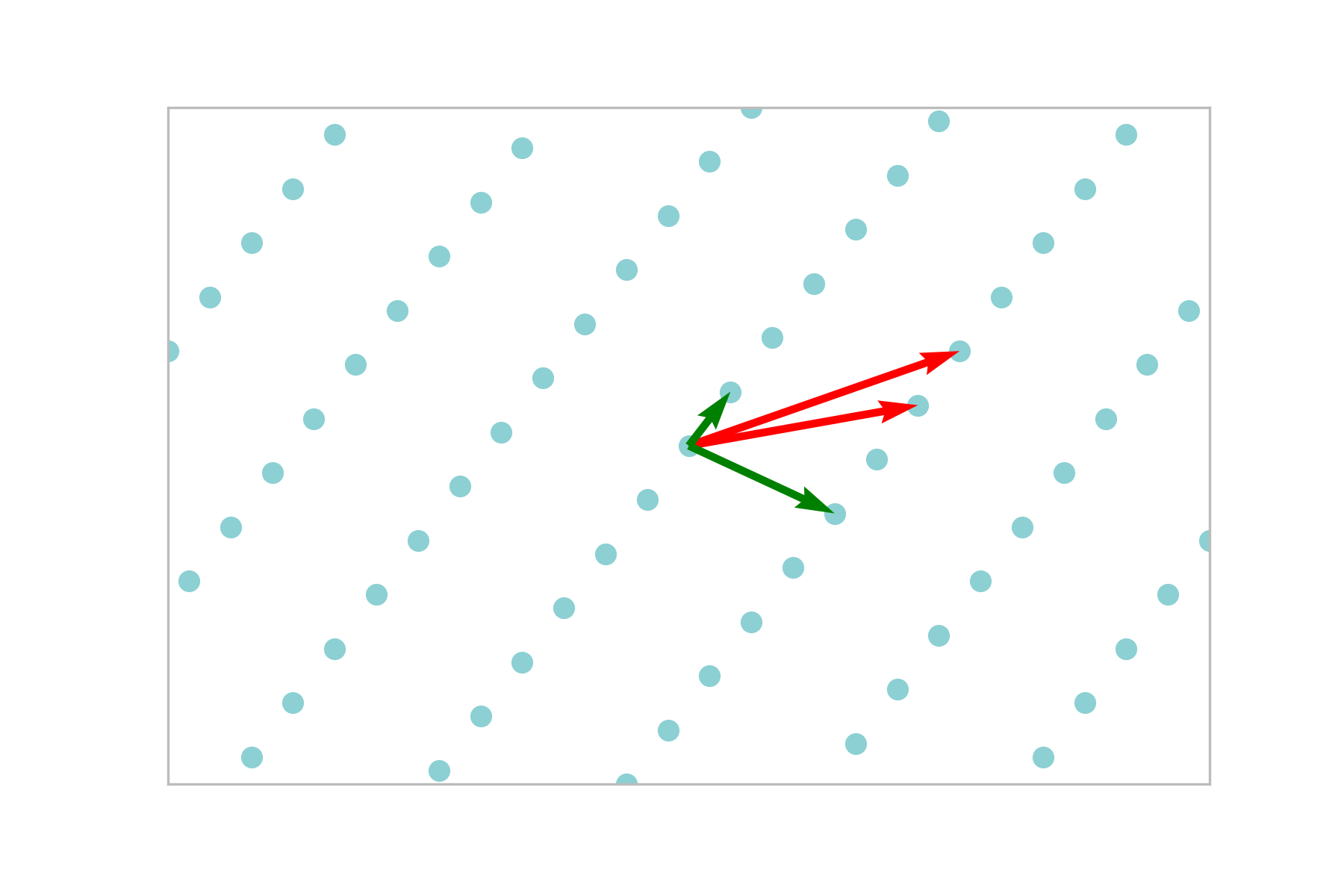

The diagram depicts an example of a 2-dimensional lattice with two basis vectors, drawn in red. Note that the elements that make up the basis vectors themselves need not be integers.

Each of the other lattice points in blue is generated by some integer linear combination of these two basis vectors. The basis vectors for a given lattice are not unique. In particular, the two vectors in green form an alternate basis for the same lattice. You will notice that the green vectors are shorter and more orthogonal than the red vectors. It turns out that having shorter, more orthogonal basis vectors for a lattice makes it much easier to work with, and indirectly leads to solutions to interesting problems. If you were some kind of grad student doing math, then, you might wonder whether there exists a well-defined procedure that takes an arbitrary lattice basis and produces a shorter basis for the same lattice.
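One way to check that two bases generate the same lattice is to write each new vector in terms of the old ones: the lattices coincide exactly when the change-of-basis matrix has integer entries and determinant $\pm 1$. The following is a minimal Python sketch of that check for the 2-dimensional case. The function names and the example vectors are made up for illustration; they are not the vectors in the diagram.

```python
from fractions import Fraction


def change_of_basis_2d(old, new):
    """Return U (rows of Fractions) with new[i] = U[i][0]*old[0] + U[i][1]*old[1]."""
    (a, b), (c, d) = [[Fraction(x) for x in v] for v in old]
    det = a * d - b * c
    if det == 0:
        raise ValueError("old basis vectors are linearly dependent")
    rows = []
    for x, y in new:
        x, y = Fraction(x), Fraction(y)
        # Solve u*(a, b) + v*(c, d) = (x, y) with Cramer's rule.
        u = (x * d - y * c) / det
        v = (a * y - b * x) / det
        rows.append([u, v])
    return rows


def same_lattice_2d(old, new):
    """True if both bases generate the same lattice (U integral, det U = +-1)."""
    U = change_of_basis_2d(old, new)
    integral = all(entry.denominator == 1 for row in U for entry in row)
    det_u = U[0][0] * U[1][1] - U[0][1] * U[1][0]
    return integral and abs(det_u) == 1


# (5, 2) and (7, 3) are a long, skewed basis of the integer grid.
print(same_lattice_2d([(5, 2), (7, 3)], [(1, 0), (0, 1)]))  # same lattice
print(same_lattice_2d([(5, 2), (7, 3)], [(2, 0), (0, 1)]))  # a strict sublattice
```

Exact rational arithmetic is used so that the integrality test is not affected by floating-point rounding.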

Why would anybody wonder about such a silly thing? As a concrete motivating problem, suppose we would like to find out whether a certain number $\alpha$ is algebraic; that is, whether it can be expressed as one of the roots of a polynomial with integer coefficients of degree $d$. We will use the lattice reducing method discussed below (the famous LLL algorithm) to find an equation such that one of its roots is $\alpha$. The general plan of attack is to set up a lattice such that short vectors in that lattice correspond to an equation that has $\alpha$ as a root.

The 2-Dimensional Case

It is easiest to start with the problem for two dimensions; we can readily visualize the vectors involved and rely on our intuition to guide us. Starting with the vectors $b_1$ and $b_2$, we wish to reduce them as much as possible. That is to say, we’d like to make the two vectors as short as possible without changing their span. How do we know when we are done? As one condition, let us require that $\lVert b_1 \rVert \le \lVert b_2 \rVert$. This imposes an ordering on the reduced basis vectors, which is nice to have, because it means we can be sure that given a solution, we aren’t accidentally ignoring other possible solutions. How do we know that we’ve reached the shortest possible vectors? Consider the operations we are allowed to perform on the basis without changing the span of the basis:

- We can exchange two basis vectors; clearly this doesn’t change the span of the basis

- We can replace a basis vector by itself plus an integer multiple of another basis vector

The first operation lets us ensure that the vectors are in increasing order of their norms. The second lets us reduce the norms of the vectors by subtracting appropriate multiples of the other basis vector. Taking inspiration from Euclid’s algorithm for calculating the GCD of two numbers, we start by subtracting as many multiples of the smaller vector as possible from the larger one. Without loss of generality, assume $b_1$ is the smaller vector (we can always exchange vectors to make this the case).

It turns out that taking the projection of the larger vector onto the smaller gives us the scalar multiple of the smaller vector that, when subtracted from the larger, minimizes the norm of the resulting vector [1]. Our first step, then, is to update $b_2$ as follows:

$$ b_2 \leftarrow b_2 - \lfloor \mu \rceil b_1, \qquad \mu = \frac{\langle b_1, b_2 \rangle}{\langle b_1, b_1 \rangle} $$

Note that we do not use the projection directly; we must round to the nearest integer so that the resulting vector remains within the lattice. Once we do this, we cannot reduce any further; adding or subtracting any multiple of $b_1$ from $b_2$ now results in a larger vector. If the new $b_2$ is smaller than $b_1$, we don’t quite yet have the smallest possible basis. We exchange the two vectors and repeat the reduction process until it is no longer possible to reduce $b_2$. This corresponds to the case where the projection coefficient $\frac{\langle b_1, b_2 \rangle}{\langle b_1, b_1 \rangle}$ has absolute value at most $\frac{1}{2}$, which means that the nearest integer is 0, implying that subtracting a multiple of $b_1$ from $b_2$ now can only make it bigger. At this point, we stop, ending up with vectors $b_1$ and $b_2$ that satisfy the conditions:

$$ \lVert b_1 \rVert \le \lVert b_2 \rVert, \qquad \left\lvert \frac{\langle b_1, b_2 \rangle}{\langle b_1, b_1 \rangle} \right\rvert \le \frac{1}{2} $$

We take these conditions to be the definition of a reduced basis, since we cannot further reduce vectors satisfying these conditions using span-preserving operations. The algorithm above (reduce, exchange, repeat) is due to Gauss, although it is also sometimes called Lagrange’s algorithm.
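The reduce, exchange, repeat loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than a tuned implementation: the name `gauss_reduce` and the example vectors are made up here, the input vectors are assumed to be linearly independent, and exact rational arithmetic is used to avoid rounding issues.

```python
from fractions import Fraction


def dot(u, v):
    """Standard inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def gauss_reduce(b1, b2):
    """Reduce a 2-dimensional lattice basis by repeated exchange and reduction."""
    b1 = [Fraction(x) for x in b1]
    b2 = [Fraction(x) for x in b2]
    while True:
        # Exchange step: keep b1 as the shorter of the two vectors.
        if dot(b2, b2) < dot(b1, b1):
            b1, b2 = b2, b1
        # Reduction step: subtract the rounded projection coefficient times b1.
        mu = dot(b1, b2) / dot(b1, b1)
        k = round(mu)
        if k == 0:
            # |mu| <= 1/2 and |b1| <= |b2|: both conditions hold, so stop.
            return b1, b2
        b2 = [y - k * x for x, y in zip(b1, b2)]


# A skewed basis of the integer grid reduces to the standard basis.
print(gauss_reduce((5, 2), (7, 3)))
```

Each pass that changes `b2` strictly shortens it, and a lattice has only finitely many points within any given radius, so the loop cannot run forever.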

Generalizing to higher dimensions

Gram Schmidt Orthogonalization Review

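For a set of vectors $B = \lbrace b_1, \cdots, b_n \rbrace$, we define the Gram-Schmidt orthogonalization coefficient $\mu_{ij} = \frac{\langle b_i, b_j^* \rangle}{\langle b_j^*, b_j^* \rangle}$. Here, $b_j^*$ is the result of performing the Gram-Schmidt orthogonalization process on the vector $b_j$, in the vector space that $B$ spans. We have $b_1^* = b_1$ by definition. For the remaining vectors, $b_i^*$ is the portion of $b_i$ that captures some element of the space spanned by $B$ that the vectors before it do not capture. Translating this into concrete formulae:

$$ b_1^* = b_1, \qquad b_i^* = b_i - \sum_{j=1}^{i-1} \mu_{ij} b_j^* \quad \text{for } 2 \le i \le n $$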
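As a concrete illustration of these definitions, here is a short Python sketch that computes the un-normalized Gram-Schmidt vectors $b_i^*$ and the coefficients $\mu_{ij}$ with exact rational arithmetic. The function name, the 0-based indexing, and the example basis are choices made for this sketch.

```python
from fractions import Fraction


def dot(u, v):
    """Standard inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def gram_schmidt(basis):
    """Return (bstar, mu): un-normalized Gram-Schmidt vectors and coefficients.

    mu[i][j] = <b_i, b_j*> / <b_j*, b_j*> for j < i (indices start at 0 here).
    """
    n = len(basis)
    bstar = []
    mu = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        v = [Fraction(x) for x in basis[i]]
        for j in range(i):
            mu[i][j] = dot(basis[i], bstar[j]) / dot(bstar[j], bstar[j])
            # Strip the component of b_i that lies along b_j*.
            v = [x - mu[i][j] * y for x, y in zip(v, bstar[j])]
        bstar.append(v)
    return bstar, mu


bstar, mu = gram_schmidt([(3, 1), (2, 2)])
print(mu[1][0])   # coefficient of b_2 along b_1*
print(bstar[1])   # what is left of b_2, orthogonal to b_1*
```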

Reduction step for LLL

The Gram Schmidt vectors are usually normalized, so that $\lVert b_i^* \rVert = 1$. We leave them un-normalized here; the norms of the vectors are arbitrary. The Gram Schmidt basis gives us a kind of ideal baseline to measure against while reducing the lattice basis; it is the shortest, most orthogonal basis we could have found, unconstrained by the tyranny of integer multiples. It leads somewhat naturally to the definition of an LLL-reduced basis. In the reduction step, we can proceed as in the 2-dimensional case, generalized in the manner of the Gram Schmidt orthogonalization process. We reduce $b_i$ by replacing it as follows, working from $j = i-1$ down to $j = 1$ and recomputing $\mu_{ij}$ after each update:

$$ b_i \leftarrow b_i - \lfloor \mu_{ij} \rceil b_j $$

Where $\lfloor \cdot \rceil$ is just the nearest integer function. Now that we have a way to reduce the lengths of our basis vectors, we can define the first condition required for an LLL-reduced basis in higher dimensions, a straightforward extension of the condition for 2 dimensions. We require that $\lvert \mu_{ij} \rvert \le \frac{1}{2}$ for $ 1 \le j < i \le n $, using the same reasoning as we did for the 2 dimensional case that once this condition is met, further norm reduction within the lattice is not possible.
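The reduction step on its own can be sketched as follows. This is an illustrative Python version that recomputes the Gram-Schmidt coefficients from scratch after every update, which is simple but slow; the helper names and the example basis are assumptions of this sketch.

```python
from fractions import Fraction


def dot(u, v):
    """Standard inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def gs_coefficients(basis):
    """Return mu with mu[i][j] = <b_i, b_j*> / <b_j*, b_j*> for j < i."""
    n = len(basis)
    bstar = []
    mu = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        v = [Fraction(x) for x in basis[i]]
        for j in range(i):
            mu[i][j] = dot(basis[i], bstar[j]) / dot(bstar[j], bstar[j])
            v = [x - mu[i][j] * y for x, y in zip(v, bstar[j])]
        bstar.append(v)
    return mu


def size_reduce(basis):
    """Return a basis of the same lattice with |mu[i][j]| <= 1/2 for all j < i."""
    basis = [[Fraction(x) for x in b] for b in basis]
    for i in range(1, len(basis)):
        # Work from the nearest earlier vector back to the first one.
        for j in range(i - 1, -1, -1):
            q = round(gs_coefficients(basis)[i][j])
            basis[i] = [x - q * y for x, y in zip(basis[i], basis[j])]
    return basis


reduced = size_reduce([[1, 1, 1], [-1, 0, 2], [3, 5, 6]])
print(reduced)
```

Subtracting a multiple of $b_j$ from $b_i$ leaves every Gram-Schmidt vector unchanged and only alters $\mu_{ik}$ for $k \le j$, which is why processing $j$ in decreasing order never undoes earlier work.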

Imposing an ordering

In the 2 dimensional case, we had an obvious way of ordering the basis vectors, essentially comparing vector magnitudes within a plane. In the higher dimensional case, consider the problem of comparing the reduced vectors $b_i$ and $b_{i+1}$. Directly comparing their norms gets us nowhere, since in the ideal case all the reduced vectors have the same (unit) norm and we would be comparing vectors with different dimensions [2]. Instead, we must compare projections of the vectors onto some subspace in order to get a more varied measure of length that we can then use to compare the vectors. For $b_i$, we use the norm $ \lVert {b_i^*} \rVert $ as the required measure; the length of $b_i$ when it is stripped of the components that lie along the vectors that come before it. Similarly, we strip $b_{i+1}$ of its components along the same vectors $b_1, \cdots, b_{i-1}$, taking the length

$$ \lVert b_{i+1}^* + \mu_{i+1,i} b_i^* \rVert $$

The goal here is to normalize both vectors by restricting them to the same subspace; by removing components of the same vectors, we effectively project them into the subspace $S_i$ that is the orthogonal complement of the span of the first $i-1$ vectors, $\text{ span}(b_1, \cdots, b_{i-1})$. This allows us to ensure an ordering using a meaningful measure of length, since the vectors are restricted to the same subspace and therefore have equal rank.

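This gives us our ordering condition; we want the first vector to be at most the second vector by the measures we have devised, which we compare as squared lengths:

$$ \lVert b_i^* \rVert^2 \le \lVert b_{i+1}^* + \mu_{i+1,i} b_i^* \rVert^2 $$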

The constraint here turns out to be too strict to ensure convergence in polynomial time, so in practice we relax it and only insist on a swap when the first measure exceeds the second by a definite factor. It suffices to require that the first measure is at most a small multiple $\delta$ of the second, that is, $\lVert b_i^* \rVert^2 \le \delta \lVert b_{i+1}^* + \mu_{i+1,i} b_i^* \rVert^2$, where it turns out [3] that any $ 1 < \delta < 4 $ will do. For simplicity, we set $\delta = \frac{4}{3}$.
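To connect this with the form of the condition more commonly seen in references, we can expand the projected length using the orthogonality of the Gram-Schmidt vectors. This is a short worked derivation under the convention used here, where the condition reads $\lVert b_i^* \rVert^2 \le \delta \lVert b_{i+1}^* + \mu_{i+1,i} b_i^* \rVert^2$ and $\delta = \frac{4}{3}$ plays the role of the constant $\frac{3}{4}$ that appears elsewhere.

$$
\begin{aligned}
\lVert b_{i+1}^* + \mu_{i+1,i} b_i^* \rVert^2 &= \lVert b_{i+1}^* \rVert^2 + \mu_{i+1,i}^2 \lVert b_i^* \rVert^2 \qquad \text{since } \langle b_{i+1}^*, b_i^* \rangle = 0 \\
\lVert b_i^* \rVert^2 \le \delta \lVert b_{i+1}^* + \mu_{i+1,i} b_i^* \rVert^2 &\iff \lVert b_{i+1}^* \rVert^2 \ge \left( \tfrac{1}{\delta} - \mu_{i+1,i}^2 \right) \lVert b_i^* \rVert^2 \\
\delta = \tfrac{4}{3},\ \lvert \mu_{i+1,i} \rvert \le \tfrac{1}{2} &\implies \lVert b_{i+1}^* \rVert^2 \ge \left( \tfrac{3}{4} - \tfrac{1}{4} \right) \lVert b_i^* \rVert^2 = \tfrac{1}{2} \lVert b_i^* \rVert^2
\end{aligned}
$$

In other words, once both conditions hold, the squared Gram-Schmidt norms can shrink by at most a factor of 2 from one vector to the next.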

The complete algorithm

Given the two conditions for a reduced basis, the final algorithm pops out almost trivially; starting from the given basis, apply the reduction step. After the reduction step has been performed, check consecutive reduced basis vectors to see if they satisfy the ordering constraint. If they do not, swap the two vectors so that they do. If at the end of this, the reduction constraint has been satisfied, we are done. Otherwise, we repeat the whole thing (reduction, swap if necessary, repeat) until both constraints are satisfied. The original paper [3] proves that this procedure is guaranteed to terminate in polynomial time, resulting in a reduced basis. It also proves additional constraints on the basis, showing that it is “short” in the sense that the first vector in the reduced basis is longer than the shortest nonzero vector in the entire lattice by at most a factor of $2^{(n-1)/2}$, which is exponential in the dimension $n$. The exponential bound may not seem all that impressive, but it tends to work fairly well in practice, and we will see later that it is good enough to solve other problems of interest.
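Putting the pieces together, the following is a minimal Python sketch of the whole procedure. It uses the common textbook formulation with a working index `k` that moves forward when the ordering condition holds and steps back after a swap, which is one way to organize the "reduce, swap if necessary, repeat" loop; it is not taken from the original paper's pseudocode. The parameter `delta` follows the convention of this post ($\delta = \frac{4}{3}$), the Gram-Schmidt data is recomputed from scratch for clarity rather than speed, and all names are choices made for this sketch.

```python
from fractions import Fraction


def dot(u, v):
    """Standard inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def gram_schmidt(basis):
    """Return (bstar, mu) for the un-normalized Gram-Schmidt process."""
    n = len(basis)
    bstar = []
    mu = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        v = [Fraction(x) for x in basis[i]]
        for j in range(i):
            mu[i][j] = dot(basis[i], bstar[j]) / dot(bstar[j], bstar[j])
            v = [x - mu[i][j] * y for x, y in zip(v, bstar[j])]
        bstar.append(v)
    return bstar, mu


def lll(basis, delta=Fraction(4, 3)):
    """Return an LLL-reduced basis of the lattice spanned by `basis`."""
    basis = [[Fraction(x) for x in b] for b in basis]
    n = len(basis)
    k = 1
    while k < n:
        # Reduction step: make |mu[k][j]| <= 1/2 for every j < k.
        for j in range(k - 1, -1, -1):
            _, mu = gram_schmidt(basis)
            q = round(mu[k][j])
            if q:
                basis[k] = [x - q * y for x, y in zip(basis[k], basis[j])]
        # Ordering condition on the consecutive pair (b_{k-1}, b_k).
        bstar, mu = gram_schmidt(basis)
        first = dot(bstar[k - 1], bstar[k - 1])
        proj = [x + mu[k][k - 1] * y for x, y in zip(bstar[k], bstar[k - 1])]
        if first <= delta * dot(proj, proj):
            k += 1
        else:
            basis[k - 1], basis[k] = basis[k], basis[k - 1]
            k = max(k - 1, 1)
    return basis


print(lll([[1, 1, 1], [-1, 0, 2], [3, 5, 6]]))
```

For the example basis, this sketch returns the vectors $(0, 1, 0)$, $(1, 0, 1)$ and $(-1, 0, 2)$ (as exact fractions), which are visibly shorter and closer to orthogonal than the input.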

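1. Consider the inner product of a potentially reduced vector with itself: $\langle b_2 - \mu b_1, b_2 - \mu b_1 \rangle = \langle b_2, b_2 \rangle - 2 \mu \langle b_1, b_2 \rangle + \mu^2 \langle b_1, b_1 \rangle$. In order to minimize this expression, take the derivative of the inner product with respect to $\mu$ and set it to zero, giving $\mu = \frac{\langle b_1, b_2 \rangle}{\langle b_1, b_1 \rangle}$.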

2. Technically speaking, we would be comparing vectors that are projections into subspaces with different ranks; $ b_i^* $ is the projection of $b_i$ into the orthogonal complement of $\text{span}(b_1, \cdots, b_{i-1})$ which is of rank $n-i+1$, and $b_{i+1}^* $ is the projection of $b_{i+1}$ into the orthogonal complement of $\text{span}(b_1, \cdots, b_{i})$ which is of rank $n-i$. This would effectively be the same as comparing vectors of different dimensions, in the sense that the first vector would be predictably biased by having an extra coordinate’s worth of freedom to vary in, affecting the norm.

3. Lenstra, Arjen Klaas, Hendrik Willem Lenstra, and László Lovász. “Factoring polynomials with rational coefficients.” Mathematische Annalen 261.4 (1982): 515-534.